Dare HEC Media Hub

Who Will Win the Tug-of-War Between Europe and Big Tech?

7 minutes

Klaus Miller, Daniel Brown

Italy’s fine against Apple’s App Tracking Transparency (ATT) puts privacy design under antitrust scrutiny. Evidence suggests regulators should measure who is harmed, and design tar…

Insights You Need

A target price may overshoot reality, but it still hits the stock quickly. New research shows that one number can accelerate market impact.

7 minutes

Alexandre Madelaine, Luc Paugam, Hervé Stolowy

A new study shows that “quality signals” can backfire: Academy Award nominations can lower viewers’ ratings by raising expectations.

7 minutes

Michelangelo Rossi, Felix Schleef

Some encounters change destinies. Keep your mind open.

Inspiring voices

94 minutes

Leaders speaking openly about their vulnerability

HEC Paris Sustainability & Organizations Institute

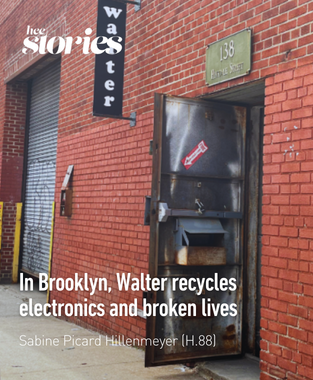

Stories

What the Homeless Taught Us About Dignity

HEC Paris Sustainability & Organizations Institute

Jean-Marc Semoulin: Trust as a Political Act

HEC Paris Sustainability & Organizations Institute

HEC Startups

Think sharper. Grasp what matters. Solve better.

Reskill Masterclass

32 minutes

How Firms Value Sales Career Paths?

Dominique Rouziès

Knowledge

7 minutes

Why CEOs Can No Longer Ignore Social Cohesion

Bénédicte Faivre-Tavignot

7 minutes

How Activist Short Sellers Move a Stock

Alexandre Madelaine, Luc Paugam, Hervé Stolowy

7 minutes

Who Will Win the Tug-of-War Between Europe and Big Tech?

Klaus Miller, Daniel Brown

7 minutes

When Oscar Nominations Make Audiences Harsher

Michelangelo Rossi, Felix Schleef

Overlooking Vulnerability Can Harm Everyone, Including Your Business

Octavio Augusto de Barros

Decoding

Enough talk. Join the doers. Make change happen.

HEC Startups

Features

April 2025

AI Technology: On a Razor's Edge?

AI challenges creativity, governance, jobs, and trust, raising urgent questions across every sector.

January 2025

Saving Lives in Intensive Care Thanks to AI

Julien Grand-Clément

AI Accuracy Isn’t Enough: Fairness Is Now Essential

Christophe Pérignon

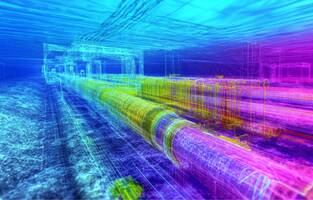

AI and Sovereignty: The Geopolitical Power of Submarine Cables

Olivier Chatain, Jeremy Ghez

Growth or Human Ties

The Selection By

Isaline Rohmer

Isaline Rohmer is a consultant and member of the Omnicité cooperative. She coordinates a research program for HEC Paris on social entreprene…

December 1st, 2025

Podcasts

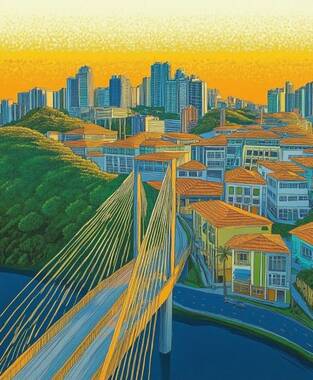

Voices Creating New Narratives and Shaping the Future of Megacities

Inside São Paulo: Building Bridges Toward Shared Prosperity

HEC Paris Sustainability & Organizations Institute

41 minutes

Inside Mumbai: Bridging Divides in a City of Extremes

HEC Paris Sustainability & Organizations Institute

Inside Cairo: A Layered City Where Past and Future Intersect

HEC Paris Sustainability & Organizations Institute

38 minutes

Inside Kinshasa: The Forces Powering Africa's Largest Urban Future

HEC Paris Sustainability & Organizations Institute

27 minutes

HEC researchers unpack their latest findings on real-world challenges

How Platform Architecture and Oscar Nominations Influence Our Choices

Michelangelo Rossi

32 minutes

Videos

Videos that break down big ideas in minutes

Leading figures with ideas that matter to elevate the debate

94 minutes

Leaders speaking openly about their vulnerability

HEC Paris Sustainability & Organizations Institute

Live masterclasses to learn new skills and grow professionally

Students’ perspectives on what matters today and what drives them

For curious minds seeking research-based insights and inspiring stories that help make sense of a world in transition and unlock solutions within a purpose-driven community.