Key Findings:

- AI enhances HR productivity, particularly in administrative, legal, and low-stakes recruitment tasks.

- Data limitations weaken impact: HR datasets are often too small or biased to deliver reliable outcomes.

- Lack of governance creates risk: Overuse of gimmicky tools without oversight may erode employee trust in HR.

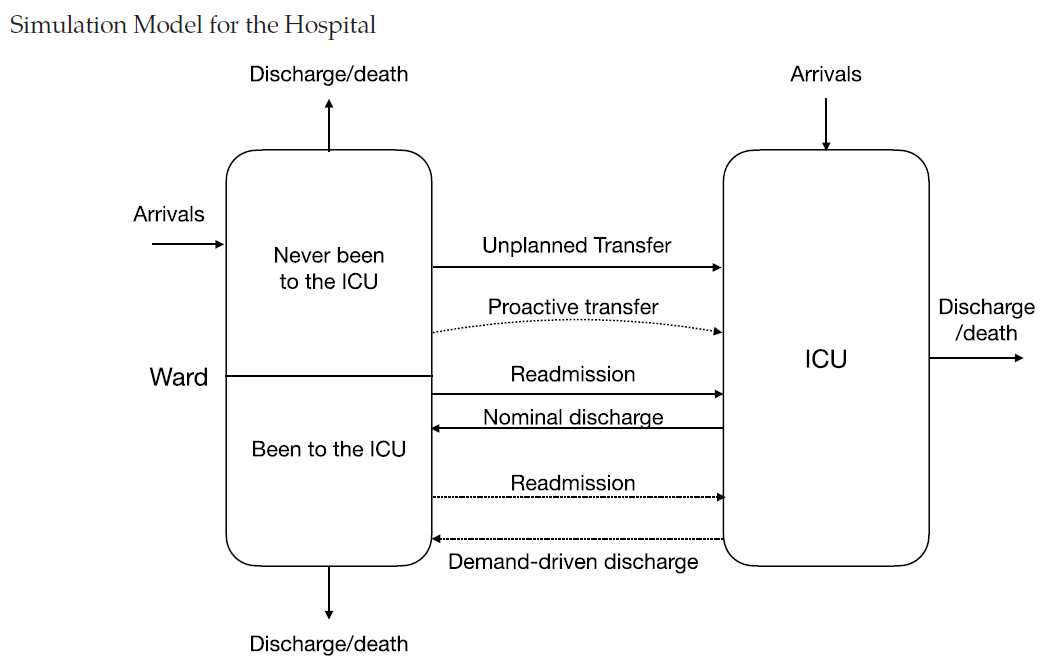

Drawing on a survey of HR managers and digitalization project managers at major companies, I highlight three potential pitfalls: the data used, the risk of turning AI into a gimmick, and algorithmic governance. But first, let’s recall what we mean by AI.

What Do We Mean By AI?

The term artificial intelligence is polysemous, just as AI itself is polymorphic. Hidden behind AI’s vocabulary – from algorithms, conversational AI and decisional AI to machine learning, deep learning, natural language processing, chatbots, voicebots and semantic analysis – is a wide selection of techniques, and the number of practical examples is also rising fast.

There’s also a distinction to be made between weak AI (non-sentient intelligence) and strong AI (a machine endowed with consciousness, sentience and intellect), also called "artificial general intelligence" (a machine that can apply intelligence to any problem rather than to one specific problem). "With AI as it is at the moment, I don’t think there’s much intelligence, and not much about it is artificial... We don’t yet have AI systems that are incredibly intelligent and that have left humans behind. It’s more about how they can help and deputize for human beings" (Project manager).

HR Teams Embrace AI to Boost Productivity

For HR managers, AI paves the way to time savings and productivity gains alongside an "enhanced employee experience" (HR managers). For start-ups (some 600 of them are innovating in HR and digital technology, including around 100 in HR and AI), the HR function is "a promising market".

Administrative and Legal Support: Helping Save Time

AI relieves HR of its repetitive, time-consuming tasks, meaning that HR staff, as well as other teams and managers, can focus on more complex assignments.

Many administrative and legal help desks are turning to AI (via virtual assistants and chatbots) to answer employees’ questions automatically, such as "Where is my training application?" or "How many days off am I entitled to?", in real time and wherever staff are based. AI refers users to the correct legal documentation or the right expert. EDF, for example, has chosen to create a legal chatbot to improve the service it provides to users.

The chatbot handles the regulatory aspects of HR management: staff absences, leave, payroll and wage policy. "We had the idea of devising a legal chatbot to stop having to answer the same recurring legal questions, allowing lawyers to refocus on cases with greater added value. In the beginning, the chatbot included 200 items of legal knowledge, and then 800... Users are 75% satisfied with it" (Project manager).


AI is used not only to handle absences and leave, expense reports and training, but also for the administrative and legal aspects of payroll and salary policy. For pay, AI systems can be used to issue payroll declarations and check their accuracy and consistency. In another vein, AI can offer packages of personalized social benefits based on employee profiles.

Recruitment Is an AI Testing Ground

Recruitment: Helping to Choose Candidates

Recruitment is another field where AI can be helpful: it can be used to simplify the search for candidates, sift through and manage applications, and identify profiles that meet the selection criteria for a given position.

Chatbots can then be used to interview candidates through pre-recorded questions, collecting information about their skills, training and previous contracts. "These bots are there to replace first-level interactions between the HR manager or employees and candidates. It frees up time so they can respond to more important issues more effectively" (Project manager).

Algorithms analyze the content of job offers semantically and target the CVs that best match recruiters’ expectations, searching internal and external databases, including professional social networks such as LinkedIn. This can surface CV profiles that would not otherwise have been pre-selected.
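To make the matching step more concrete, here is a minimal Python sketch under simplifying assumptions. Real tools rely on much richer semantic models; this one only compares word counts between a job offer and each CV using cosine similarity. The functions `tokens`, `cosine` and `rank_cvs`, and the sample texts, are hypothetical illustrations rather than any vendor’s method.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> Counter:
    """Lower-case word counts: a crude, hypothetical stand-in for semantic analysis."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_cvs(job_offer: str, cvs: dict[str, str]) -> list[tuple[str, float]]:
    """Return (candidate_id, score) pairs sorted from best to worst match."""
    offer = tokens(job_offer)
    scores = [(cid, cosine(offer, tokens(text))) for cid, text in cvs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical job offer and CVs, for illustration only.
    offer = "customer service advisor, banking, phone and email support"
    cvs = {
        "A": "five years of customer service in retail banking, phone support",
        "B": "warehouse logistics, forklift operation",
    }
    print(rank_cvs(offer, cvs))
```

A word-overlap score like this also shows the limits mentioned later in this section: it rewards matching vocabulary, not the complex skills that higher-level positions call for.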

Unilever has been using AI in tandem with a cognitive neuroscience approach to recruiting since 2016. Some start-ups offer services that highlight candidate profiles without relying on CVs, diplomas or experience; their approach is based on affinity and predictive matching, or on building smart data.

These tools are aimed primarily at companies with high volumes of applications to process, such as banks recruiting for customer service positions or large retailers recruiting department supervisors. Candidates are informed about the process and give their permission for their smartphone’s or computer’s microphone and camera to be activated.

These techniques appear to be efficient for low-skilled jobs and for tight labor markets where positions are hard to fill. Conversely, the more a position calls for high-level, complex skills, the more the technology’s limitations show.

Although new use cases keep emerging, corporate HR managers and project managers disagree about the added value of AI in recruitment. Some think that AI helps generate applications, identifies skills that a traditional approach would have overlooked, and assists in selecting candidates. Others are more circumspect: as things stand, they argue, people may be "overpromising" on what AI can bring to the table.

Training and Skills: Personalized Career Paths

The AI approach to training involves a shift from acquiring business skills to customizing career paths. With the advent of learning analytics, training techniques are evolving. Data that tracks how people learn (the time needed to acquire knowledge and the level of understanding) can be used to model individual learning styles and to personalize suggestions for skills development.

In addition, AI is used to offer employees opportunities for internal mobility based on their wishes, skills and the options available inside the company. AI start-ups put forward solutions for streamlining mobility management that combine assessment, training and suggestions about pathways, positions and skills development programs. In France, however, these solutions are restricted by the GDPR (General Data Protection Regulation), whereas in other countries they can be more individualized.


Saint-Gobain has decided to harness machine learning to upgrade the way it manages its talent. A project team with diverse profiles (HR, data scientists, lawyers, business lines, etc.) has been set up with two objectives: to use AI to identify talent not detected by the HR and managerial teams, and to detect talent at high risk of leaving the company. Confidentiality is guaranteed, and no decision is delegated to machines.

Towards a Better Understanding of Commitment

AI provides opportunities for pinpointing employees who are at risk of resigning or for improving our understanding of the social phenomena in companies.

"Every week or two, we’re going to ask employees 45 seconds’ worth of questions on dozens of engagement levers... The responses are anonymized and aggregated and, from another perspective, will give indicators to the various stakeholders: the head of HR, the manager, an administrator and so on. We’ll be able to tell in real time what promotes or undermines engagement, while offering advice" (Project manager).

Thanks to this application, HR managers, other managers and employees can use real-time indicators that show their teams’ strengths and weaknesses without knowing who said what. “As well as helping to improve your own work and personal style, these variables mean that, if a corrective action has been put in place, you can tell what its real effect is straightaway” (HR manager).
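Keeping answers anonymous can take more than removing names: in a very small team, an average score can still reveal an individual’s answer. The Python sketch below illustrates one common safeguard under stated assumptions: it aggregates responses per team and engagement lever and hides any group with fewer than a hypothetical minimum of five respondents. The threshold, the function name `team_indicators` and the data layout are illustrative assumptions, not a description of the application quoted above.

```python
from collections import defaultdict
from statistics import mean

MIN_RESPONDENTS = 5  # hypothetical threshold below which results are hidden


def team_indicators(responses, min_respondents=MIN_RESPONDENTS):
    """Aggregate anonymous pulse-survey scores per (team, lever).

    `responses` is an iterable of (team, lever, score) tuples carrying no
    respondent identifier. Groups smaller than `min_respondents` are reported
    as None so that individual answers cannot be inferred from the average.
    """
    groups = defaultdict(list)
    for team, lever, score in responses:
        groups[(team, lever)].append(score)
    return {
        key: round(mean(scores), 2) if len(scores) >= min_respondents else None
        for key, scores in groups.items()
    }


if __name__ == "__main__":
    # Hypothetical data: five answers for one team, only two for another.
    responses = [("sales", "workload", s) for s in (2, 3, 3, 4, 2)]
    responses += [("legal", "workload", 5), ("legal", "workload", 4)]
    print(team_indicators(responses))
```

The trade-off is deliberate: small teams lose real-time visibility on some levers in exchange for protecting the anonymity that makes honest answers possible.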

While artificial intelligence can, as these various examples show, support human resources management, some of these new uses are still being tested.

Beyond questions of implementation, doubts and criticisms remain, notably regarding HR data, return on investment and algorithmic governance.

Governing AI in HR Requires Human Judgment

Data Quality and Restricted Quantity

Data is the key ingredient for AI, and its quality is of paramount importance. If the input data is poor, the results will be vague or distorted. The example of Amazon is emblematic in this respect: the company quickly abandoned its first recruitment software because it tended to favor men’s CVs. The program had been built on the resumes the company had received over the previous 10 years, most of which came from men.
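The mechanism is easy to reproduce on a toy scale. The Python sketch below is not Amazon’s system, whose internals the source does not describe; it is a hypothetical naive-Bayes-style word scorer trained on made-up hiring history. Because the word `women's` appears only in CVs that were rejected in that history, the model learns to penalize it, even though nobody told it to. The functions `learn_weights` and `score` and all the data are illustrative assumptions.

```python
import math
from collections import Counter


def learn_weights(history, smoothing=1.0):
    """Learn a log-odds weight per word from past (cv_text, was_hired) pairs.

    Toy screening model: words seen more often in past hires' CVs get positive
    weights, words seen more often in rejected CVs get negative ones.
    Laplace smoothing avoids log(0) for words seen in only one group.
    """
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        # Count each word at most once per CV (presence, not frequency).
        (hired if was_hired else rejected).update(set(text.lower().split()))
    n_hired = sum(1 for _, was_hired in history if was_hired)
    n_rejected = len(history) - n_hired
    vocab = hired.keys() | rejected.keys()
    return {
        w: math.log((hired[w] + smoothing) / (n_hired + 2 * smoothing))
        - math.log((rejected[w] + smoothing) / (n_rejected + 2 * smoothing))
        for w in vocab
    }


def score(cv_text, weights):
    """Sum the learned weights of the words in a new CV (unknown words count 0)."""
    return sum(weights.get(w, 0.0) for w in set(cv_text.lower().split()))


if __name__ == "__main__":
    # Hypothetical, deliberately skewed history of past decisions.
    history = [
        ("python engineer chess club", True),
        ("python engineer football team", True),
        ("java engineer chess club", True),
        ("python engineer women's chess club", False),
        ("java engineer women's football team", False),
    ]
    weights = learn_weights(history)
    print(weights["women's"])  # negative: the skew in past decisions is learned
```

Two CVs that differ only by that one word now receive different scores, which is exactly the kind of distortion the Amazon case illustrates.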

In addition, HR data sets tend to be smaller than those in other areas. Even in large companies, the number of employees is very low compared with the number of purchases made by customers. Sales generate a huge number of observations for each item, which makes big data applications easy to run. But there’s nothing of the sort in HR!

The quantity and quality of data need to be considered, as well as how to embed the data in a context and time frame that support analysis and decision-making. This is the case, for instance, in predictive maintenance, where expert systems can detect signs of wear in a machine before human beings do: collecting the appropriate data makes it possible to overhaul the machinery just in time. Human systems are a different matter, however: the actions and reactions of individuals are not tracked (and should they be?), and may turn out to be totally unpredictable.

Return on Investment and the Dangers of Gimmickry

Investing in AI can be costly, with HR managers concerned about budgets and returns on investment.

"Company websites have to be revamped and modernized on a regular basis... And let's be realistic about it, making a chatbot will cost you around EUR 100,000, while redoing the corporate website will be ten times more expensive... And a chatbot is the ‘in thing’ and gives you a modern image, there’s a lot of hype, and – what’s more – it’s visible for the company! It’s something that can be seen!" (Project manager).

In many organizations, the constraints imposed by the small amount of data, and the few opportunities to iterate a process, raise the question of cost effectiveness. The HR managers we met for our study asked themselves: Should they invest in AI? Should they risk undermining the trust that employees may have in HR just to be one of the early adopters? And, although AI and virtual technology are in the limelight, are they really a priority for HR? Especially since trends come and go in quick succession: version 3.0 has barely been installed before version 4.0 and soon 5.0 come on the scene.

A further danger lies in wait for AI: the descent into gimmickry, a downward spiral that, it must be emphasized, threatens not just AI but management tools more broadly. Now that major HR software packages come equipped with applications that can track a veritable profusion of HR indicators, isn’t there a risk that we’ll drown in a sea of information of questionable relevance and effectiveness? Say yes to HR tools and no to gimmicks!

Many start-ups in AI and HR have only a partial view of the HR function. They offer solutions for a specific area without always being able to integrate them into a company’s particular ecosystem.

Algorithmic Governance

Faced with the growth in AI and the many solutions on offer, HR managers, though aware of AI’s strengths, are asking themselves: "Have I found HR professionals I can discuss things with? No. Consultants? Yes, but all too often I’ve only been given limited information, repeated from well-known business cases, which doesn’t really tell me what I’m getting into... As for artificial intelligence, I’ve got to say, I haven’t come across it.

Based on these observations, I admit I’m not very keen on becoming a beta tester, blinded by the complexity of the algorithms and without any real ability to compare the accuracy of the outcomes these applications would give me. Should we risk the quality of our work and our smooth functioning, not to mention the trust our employees put in us, just to be among the early adopters?" (HR manager).

Society as a whole is defined by its infinite diversity and lack of stability. Wouldn’t automating management and decision-making in HR fly in the face of this reality, which is both psychological and sociological? The unpredictability of human behavior cannot be captured in data. With AI, don’t we risk replacing management, analysis and informed choices with an automatism that destroys the vitality of innovation?

What room would there then be for managerial inventiveness and creativity, which are so important when dealing with specific management issues? While the questions asked in HR are universal (how can we recruit, evaluate and motivate…?), the answers are always local. The art of management cannot, in fact, be divorced from its context.

Ultimately, the challenge is not to sacrifice managerial methods but to capitalize on advances in technology to encourage a holistic, innovative vision of the HR function where AI will definitely play a larger role in the future, especially for analyzing predictive data.

See also: Chevalier F (2023), “Artificial Intelligence and Human Resources Management: Practices and Questions” in Monod E, Buono AF, Yuewei Jiang (eds), Digital Transformation: Organizational Challenges and Management Transformation Methods, Research in Management, Information Age Publishing.

“AI and Human Resources Management: Practices, Questions and Challenges”, Academy of Management (AOM) Conference, August 5-9, 2022, Seattle, USA.