AI Technology: On a Razor's Edge?

AI challenges creativity, governance, jobs, and trust, raising urgent questions across every sector.

From digital sovereignty to misinformation, AI is both opportunity and risk - demanding ethical, strategic, and global responses.

Saving Lives in Intensive Care Thanks to AI

HEC Paris research by Julien Grand-Clément reveals that a widely used AI mathematical model could reduce ICU mortality by 20% by alerting doctors before patients' conditions worsen.

AI Is Reshaping the Creative Economy

From copyright to competition, HEC Paris and CNRS research by Thomas Paris reveals how AI tools are transforming - and polarizing - the creative sector.

AI Accuracy Isn’t Enough: Fairness Is Now Essential

HEC Paris professor Christophe Pérignon reveals why AI models must be tested for fairness, stability, and interpretability - not just predictive performance.

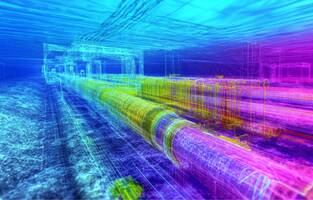

AI and Sovereignty: The Geopolitical Power of Submarine Cables

Two HEC Paris researchers, Olivier Chatain and Jeremy Ghez, reveal how AI geopolitics is turning undersea cables into critical fault lines in a fragmented world economy.